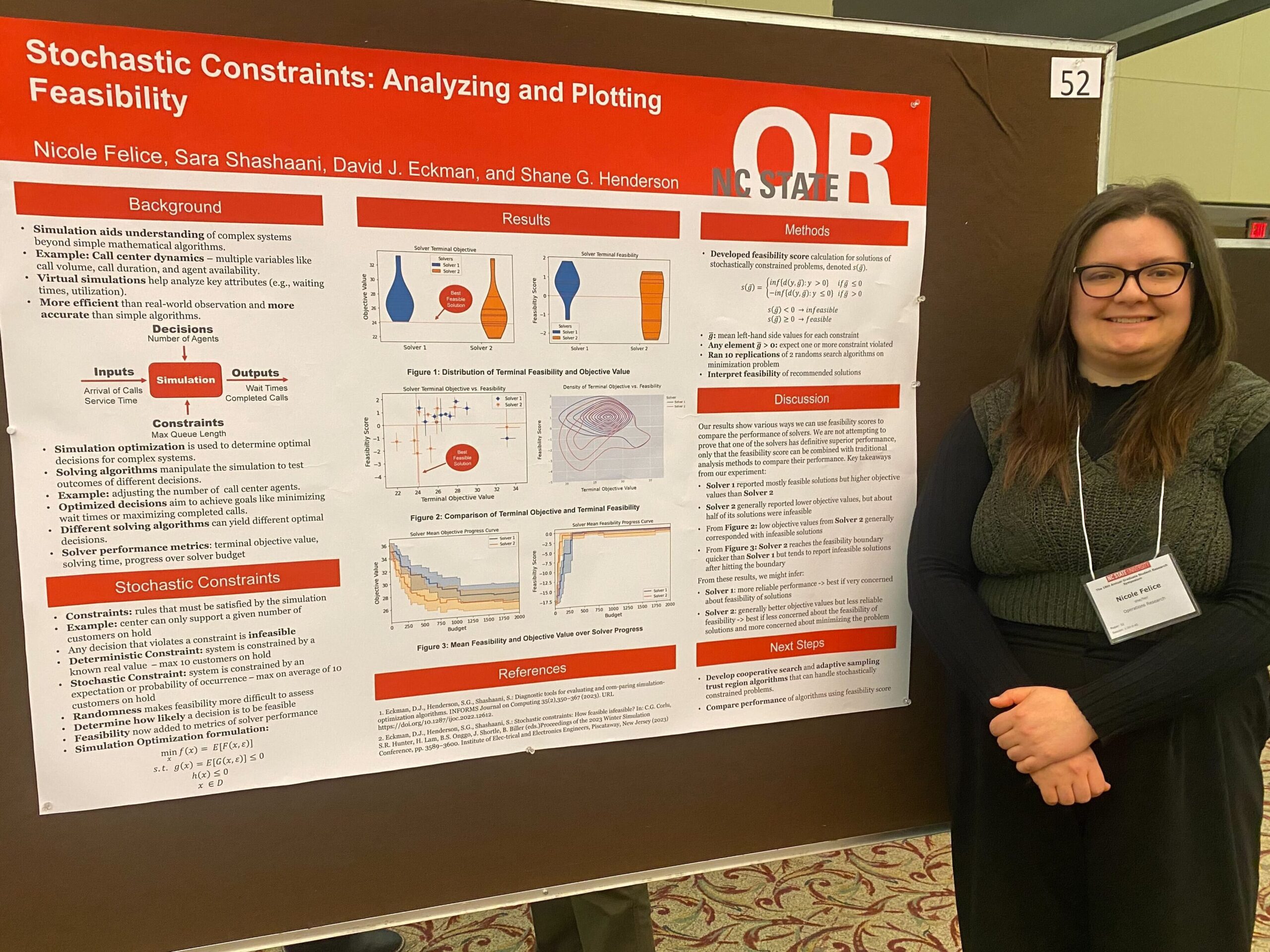

Apr 25: Felice presenting her poster on stochastic constraints at the 2025 NC State Grad Symposium

Mar 25: Ha receives 2nd place in the prestigious IISE Pritsker Doctoral Dissertation Award for his dissertation “Accelerating Stochastic Derivative Free Optimization”! Congratulations, Yunsoo!

Oct 24: Paper “Complexity of Zeroth- and First-Order Stochastic Trust-Region Algorithms” received a minor-revision decision from SIAM Journal on Optimization.

Oct 24: The group has five talks at #INFORMS2024 in Seattle. Stop by if you are at the conference.

Oct 24: Two papers accepted for publication.

Sep 24: Paper by Ha, Shashaani, and Menickelly, “Two-stage Sampling and Variance Modeling for Variational Quantum Algorithms”, accepted for publication in INFORMS Journal on Computing.

Aug 24: Shashaani’s “Simulation Optimization: Introductory Tutorial on Methodology” to appear in the Proceedings of the 2024 Winter Simulation Conference. The work differs from many other simulation optimization tutorials in its focus.

Jun 24: Four papers, on nested partitioning, digital twins, data farming for optimization, and new metrics for distributionally robust optimization, were accepted for publication in the Proceedings of the 2024 Winter Simulation Conference.

May 24: Shashaani is awarded a grant from the Office of Naval Research for a three-year project on “Fast and Scalable Stochastic Derivative-free Optimization”. Shashaani will lead this effort, which seeks to advance stochastic optimization in theory and practice for large-scale, noisy black-box problems.

Apr 24: The group had a good night at the 2024 ISE CA Anderson Awards.

Ha received the ISE Distinguished Dissertation Award, which recognizes recent PhD graduates who have written original, innovative dissertations that reflect outstanding technical contributions and are likely to have significant scientific and societal impact! He will be the department’s nominee for the IISE Pritsker Dissertation Award and/or INFORMS Dantzig Dissertation Award. Jeon (Ha’s friend, teammate, and basketball buddy) received the award on Ha’s behalf, as he was traveling. Congratulations, Yunsoo!!

Shashaani received the ISE Faculty Scholar Award, which recognizes current ISE (tenure track) assistant and associate professors for excellence in research, teaching, and service over the past three calendar years and the potential for becoming a University Faculty Scholar.

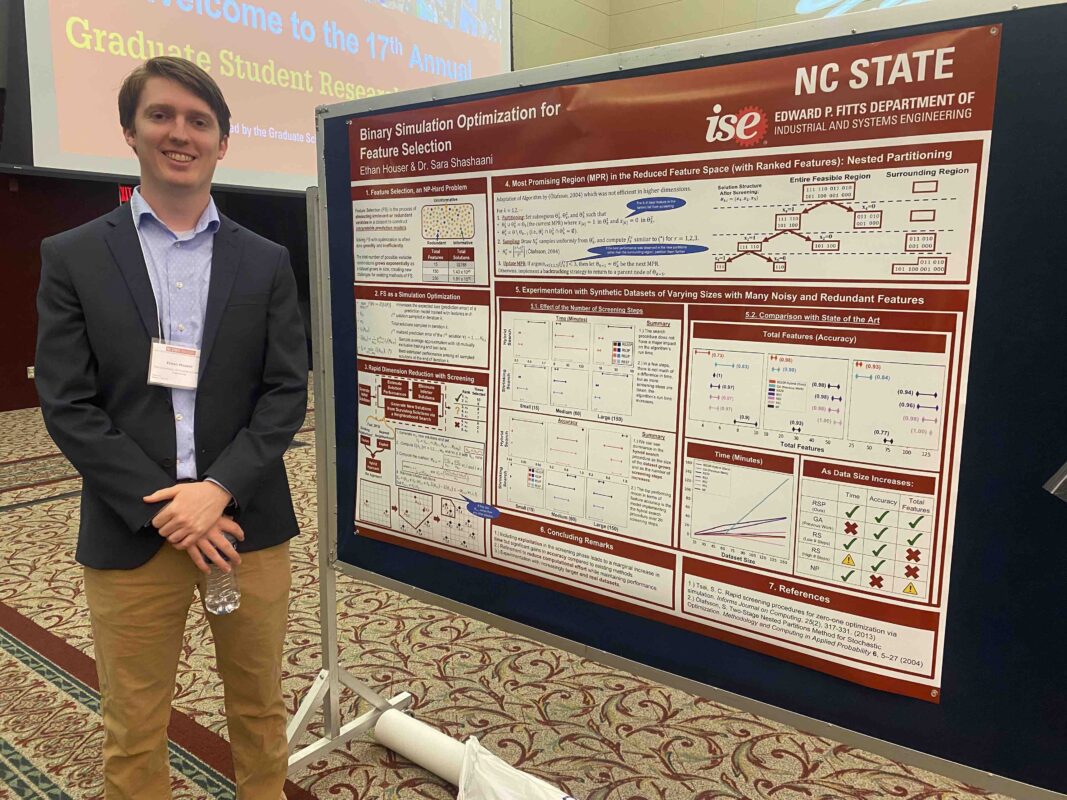

Apr 24: Houser presents his research on robust and fast feature selection with screening and partitioning methods at the 2024 NC State Graduate Student Research Symposium. His poster will be added to the group’s website!

Mar 24: Ha presented “First Order Trust Region Methods with Adaptive Sampling” at the 2nd INFORMS Optimization Society Conference in Houston, TX.

Mar 24: Paper “Iteration Complexity and Finite-Time Efficiency of Adaptive Sampling Trust-Region Methods for Stochastic Derivative-Free Optimization” accepted for publication in IISE Transactions.

Jan 24: New paper “Robust Variational Quantum Algorithms Incorporating Hamiltonian Uncertainty” submitted.

Jan 24: New paper “Simulation Model Calibration with Dynamic Stratification and Adaptive Sampling” submitted.

Nov 23: New paper “Statistical Inference on Simulation Output: Batching as an Inferential Device” with Y. Jeon and R. Pasupathy submitted.

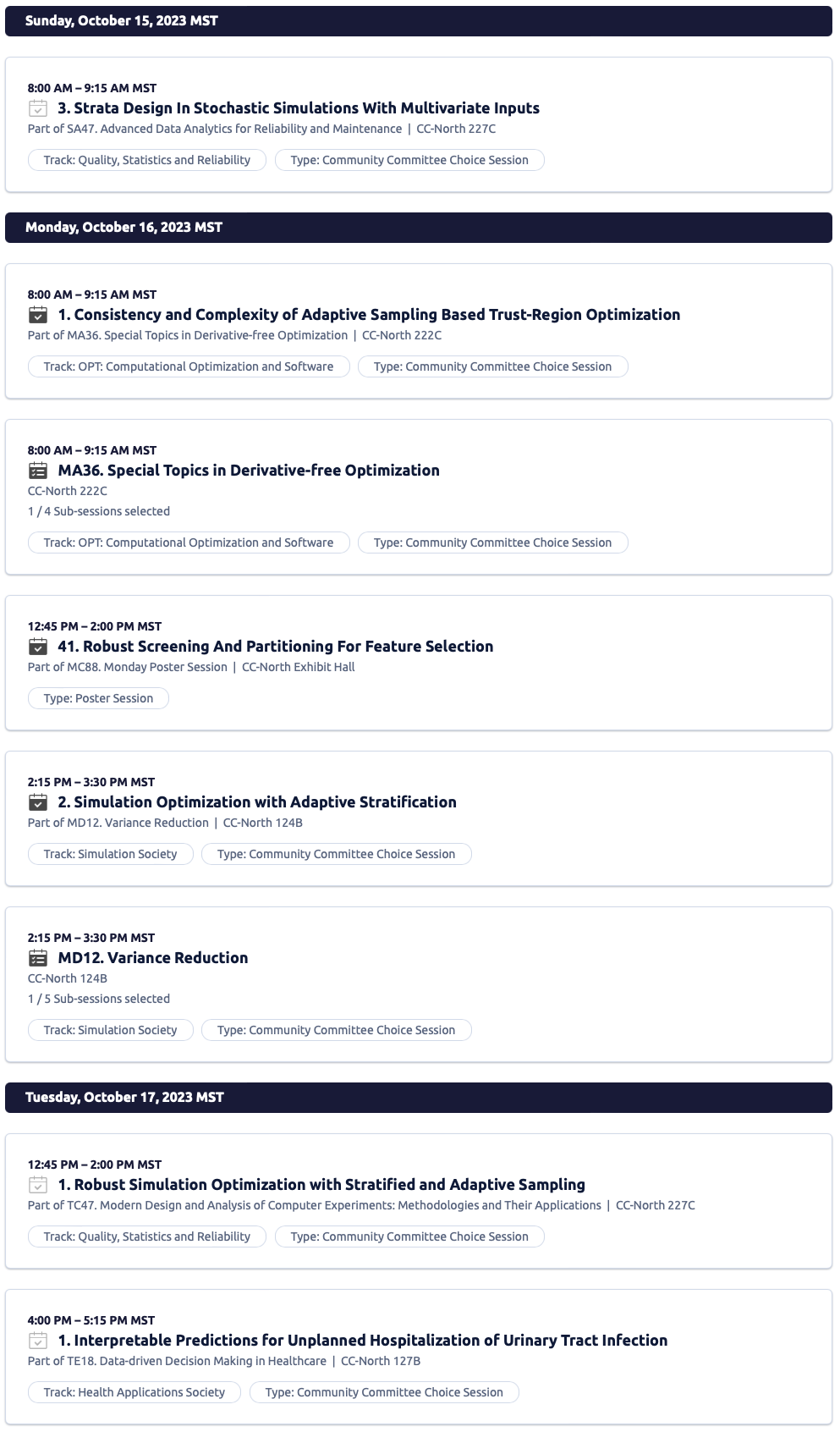

Oct 23: Shashaani’s group has five presentations at the 2023 INFORMS Annual Meeting.

Aug 23: Paper “On Common-Random-Numbers and the Complexity of Adaptive Sampling Trust-Region Methods” with Ha and Pasupathy is now available on Optimization Online.

Jul 23: Paper “Risk Score Models for Unplanned Urinary Tract Infection Hospitalization” with Alizadeh, Vahdat, Ozaltin, and Swann submitted.

Jul 23: Paper “Building Trees for Probabilistic Prediction via Scoring Rules” with Surer, Plumlee, and Guikema submitted.