Our research interests lie at the intersection of simulation, stochastic optimization, and probabilistic data analysis. We aim to create robust and fast tools for analysis and decision-making under uncertainty. We are actively involved in improving the computing (cyber)infrastructure for optimization in stochastic settings. We are also invested in bridging the Monte Carlo perspective and machine learning.

All ongoing and prospective projects of the group can be viewed within two main thrusts:

(I) Efficiency and Reliability of Simulation Optimization Solvers

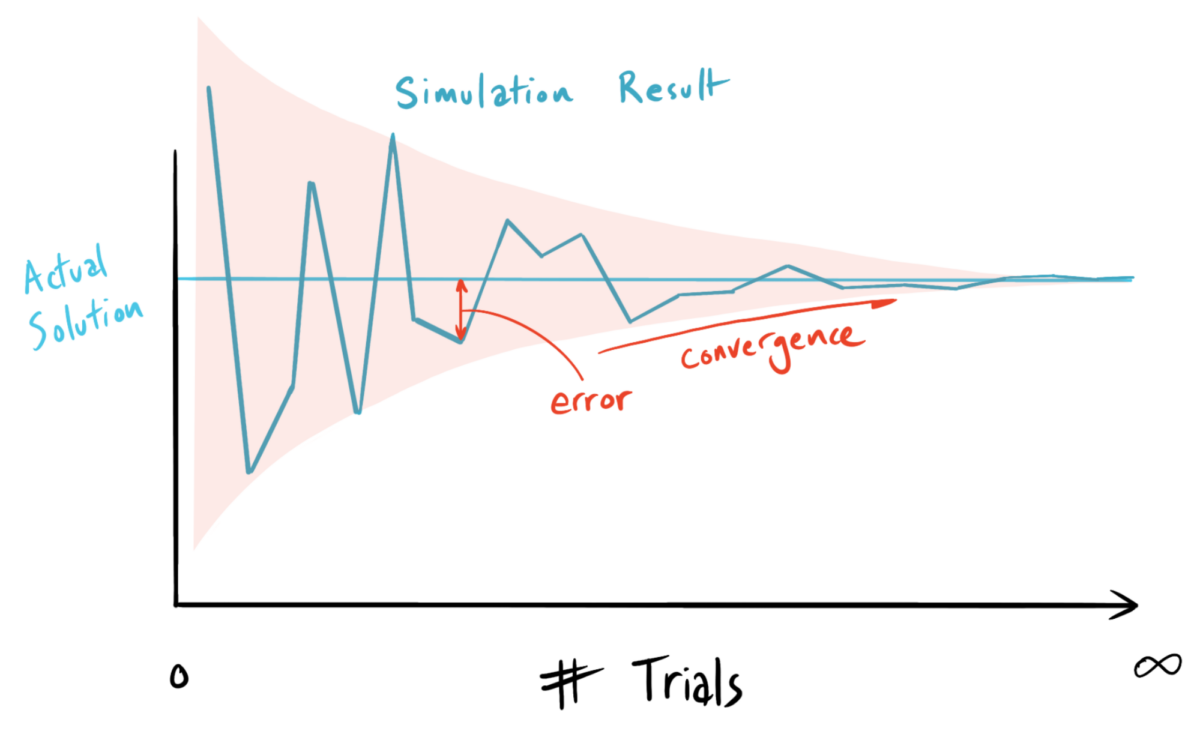

Finding optimal solutions to problems represented with stochastic simulations is hard. We seek to design robust and efficient simulation optimization solvers.

- The group’s primary focus area is local optimization of noisy, continuous, and especially derivative-free problems (given their relevance in simulation and black-box contexts), with recent attention to challenges in quantum computing applications. We have concentrated our efforts on advancing adaptive sampling strategies (deciding on the fly how many oracle replications to take at each point so as to guarantee almost sure convergence and optimal efficiency) for trust-region algorithms. Trust regions are increasingly popular due to their flexibility for nonconvex stochastic functions. We aim to improve trust regions’ theoretical and practical properties for a broad range of problems (recently funded by an ONR grant). Some important research developments follow:

- Ha, Y., Shashaani, S., and Pasupathy, R., Complexity of Zeroth- and First-order Stochastic Trust-Region Algorithms, Under Review at SIAM Journal on Optimization, arXiv preprint arxiv.org/abs/2405.20116

- In this paper we propose a comprehensive framework to analyze the sample complexity (total number of oracle runs) of stochastic trust-region algorithms to solve nonlinear nonconvex smooth problems when the oracle only provides noisy function observations, or both noisy function and noisy (but unbiased) gradient vector observations. We establish a link between the sample size, the oracle order, the use of common random numbers, and stochastic sample path structure.

- Shashaani, S., Simulation Optimization: An Introductory Tutorial, In Proceedings of the 2024 Winter Simulation Conference. (Under Review)

- This paper offers a perspective on the mathematical programming and approximation aspects of general algorithms in the Simulation Optimization area.

- Ha, Y., Shashaani, S., Iteration Complexity and Finite-Time Efficiency of Adaptive Sampling Trust-Region Methods for Stochastic Derivative-Free Optimization, IISE Transactions, doi.org/10.1080/24725854.2024.2335513

- The focus of this paper is on derivative-free contexts. On the one hand, the interpolation points for each iteration are selected using a coordinate basis or a rotated coordinate basis, which reduces the dependence of the number of required points on the problem dimension. On the other hand, direct search steps are permitted, when possible, to increase the probability of finding better solutions. Almost sure convergence results under these changes are obtained. Experiments with low-to-moderate-dimensional simulation problems provide insights into accelerated finite-time performance.

- Ha, Y., Shashaani, S., and Menickelly, M., Two-stage Sampling and Variance Modeling for Variational Quantum Algorithms, Under Minor Revisions at INFORMS Journal on Computing, arXiv preprint arxiv.org/abs/2401.08912

- Since adaptive sampling can be costly for quantum oracles due to the large communication cost, we introduce a two-step version of the stochastic trust-region algorithm by means of a variance model. Trust-region methods use a model of the function values; an additional model of the variance values can help not only reduce the communication cost but also discover the global optimum in nonconvex settings where the variance vanishes at the globally optimal solution (as is the case in many quantum computing applications).

- Ha, Y., Shashaani, S., Towards Greener Stochastic Derivative-Free Optimization with Trust Regions and Adaptive Sampling. In Proceedings of the 2023 Winter Simulation Conference, edited by C.G. Corlu, S.R. Hunter, H. Lam, B.S. Onggo, J. Shortle, and B. Biller. Piscataway, NJ, 2023: IEEE.

- Ha, Y., Shashaani, S., and Tran-Dinh, Q., Improved Complexity of Trust-region Optimization for Zeroth-order Stochastic Oracles with Adaptive Sampling, In Proceedings of the 2021 Winter Simulation Conference, edited by S. Kim, B. Feng, K. Smith, S. Masoud, Z. Zheng, C. Szabo, and M. Loper, Piscataway, NJ, 2021: IEEE.

- Vasquez, D., Shashaani, S., and Pasupathy, R. ASTRO: Adaptive Sampling Trust-Region Optimization, A Class of Derivative-based Simulation Optimization Algorithms – Numerical Experiments. In Proceedings of the 2019 Winter Simulation Conference, edited by N. Mustafee, K.-H.G. Bae, S. Lazarova-Molnar, M. Rabe, C. Szabo, P. Haas, and Y.-J. Son, Piscataway, NJ, 2019: IEEE.

- Shashaani, S., Hashemi, F.S. and Pasupathy, R., ASTRO-DF: A class of adaptive sampling trust-region algorithms for derivative-free stochastic optimization. SIAM Journal on Optimization 28, no. 4 (2018): 3145-3176. doi.org/10.1137/15M1042425

- Shashaani, S., Hunter, S. R. and Pasupathy, R., 2016. ASTRO-DF: Adaptive Sampling Trust-Region Optimization Algorithms, Heuristics, and Numerical Experience. In Proceedings of the 2016 Winter Simulation Conference, edited by T.M.K. Roeder, P.I. Frazier, R. Szechtman, E. Zhou, T. Huschka, and S.E. Chick, Piscataway, NJ, 2016: IEEE.

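The adaptive sampling idea described in this thrust (deciding on the fly how many oracle replications to take at a point) can be illustrated with a minimal sketch. It assumes a simplified stopping rule, namely replicating until the standard error of the sample mean falls below a constant times the squared trust-region radius; this is not the exact rule analyzed in the papers above. The names `adaptive_sample_mean`, `kappa`, `n_min`, `n_max`, and the test oracle `noisy_quadratic` are hypothetical.

```python
import math
import random
import statistics


def adaptive_sample_mean(oracle, x, delta, kappa=1.0, n_min=5, n_max=10_000):
    """Replicate a noisy oracle at x until the standard error of the sample
    mean is at most kappa * delta**2, or until n_max replications are used.

    Assumed, simplified rule for illustration only.
    """
    obs = [oracle(x) for _ in range(n_min)]
    while len(obs) < n_max:
        std_err = statistics.stdev(obs) / math.sqrt(len(obs))
        if std_err <= kappa * delta ** 2:
            break  # estimate is accurate enough for this trust-region radius
        obs.append(oracle(x))
    return statistics.fmean(obs), len(obs)


rng = random.Random(0)


def noisy_quadratic(x):
    """Hypothetical oracle: x^2 observed with standard normal noise."""
    return x * x + rng.gauss(0.0, 1.0)


# A large radius tolerates a rough estimate; a small radius demands more runs.
est_large, n_large = adaptive_sample_mean(noisy_quadratic, 2.0, delta=1.0)
est_small, n_small = adaptive_sample_mean(noisy_quadratic, 0.1, delta=0.3)
print(n_large, n_small)
```

Tying the accuracy requirement to the radius means that early iterations, where the trust region is large, spend few replications, while the sampling effort grows as the radius shrinks near a stationary point.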

- Having experienced the various challenges in simulation optimization, we have actively engaged in establishing strong infrastructure to help the development and improvement of tools and solvers in the area. One such effort has been revitalizing “SimOpt”, an open-source object-oriented library, testbed, and benchmarking platform. The library includes new evaluation metrics carefully devised to capture a solver’s probabilistic behavior in finite time. New evaluation metrics for solvers that handle stochastic constraints and the use of experimental design for solver tuning within this platform are other developing directions.

- Eckman, D.J., Shashaani, S., Henderson, S., SimOpt: A Testbed for Simulation-Optimization Experiments, INFORMS Journal on Computing, 35(2):495-508, 2023. doi.org/10.1287/ijoc.2023.1273

- Eckman, D.J., Shashaani, S., Henderson, S.G., Diagnostic Tools for Evaluating and Comparing Simulation-Optimization Algorithms, INFORMS Journal on Computing, 35(2):350-367, 2023. doi.org/10.1287/ijoc.2022.1261

- Shashaani, S., Eckman, D.J., Sanchez, S., Data Farming the Parameters of Simulation-Optimization Solvers, Under Second Review at ACM Transactions on Modeling and Computer Simulation (TOMACS)

- *Felice, N., Shashaani, S., Eckman, D.J., and Sanchez, S.S., Data Farming for Repeated Simulation Optimization Experiments, In Proceedings of the 2024 Winter Simulation Conference. (Under Review)

- Eckman, D.J., Henderson, S., Shashaani, S., Simulation Optimization with Stochastic Constraints. In Proceedings of the 2023 Winter Simulation Conference, edited by C.G. Corlu, S.R. Hunter, H. Lam, B.S. Onggo, J. Shortle, and B. Biller. Piscataway, NJ, 2023: IEEE.

(II) Monte Carlo Simulation Methods for Machine Learning and Big Data

Learning from Big Data is computationally challenging. Monte Carlo theory helps glean distributional insights for interpretable prediction and prevention of high-risk events.

- For calibrating digital twins (a project supported by an NSF grant), we seek to enhance solvers’ ability to handle big data. This challenge, motivated by computer models that predict power generation in wind farms, has led to integrating the well-known variance reduction technique of stratified sampling within continuous optimization. To improve solver efficiency as much as possible, the question is not only how many samples to use but also which ones. The goal is to economize the estimation not of a single function value but of the entire stochastic process that a solver generates. Therefore, both adaptive budget allocation and adaptive stratification may help with big data. Dynamic partitioning via binary trees is an approach we have taken, guided by an in-depth study of score-based probabilistic trees. We also recently adopted classical simulation methods such as control variates and concomitant variables for this purpose.

- Shashaani, S., Sürer, Ö., Plumlee, M. and Guikema, S., 2024. Building Trees for Probabilistic Prediction via Scoring Rules. Technometrics, pp.1-12. doi.org/10.1080/00401706.2024.2343062

- We propose training trees with metrics other than the sum of squared errors, chosen according to the goal of the probabilistic prediction. We consider some of the widely used proper scoring rules, such as interval scores and the continuous ranked probability score, and illustrate how they may be more successful in finding heterogeneity in the data. We provide theoretical results on the consistency of the resulting trees and easy-to-use software on GitHub.

- Jain, P., Shashaani, S., and Byon, E., Wake Effect Parameter Calibration with Large-Scale Field Operational Data using Stochastic Optimization, Applied Energy, 347: 121426, 2023. doi.org/10.1016/j.apenergy.2023.121426

- Jain, P., Shashaani, S., and Byon, E., Simulation Model Calibration with Dynamic Stratification and Adaptive Sampling, Under Second Review at Journal of Simulation. arXiv preprint arxiv.org/abs/2401.14558

- Park, J., Byon, E., Ko, Y.M., and Shashaani, S., Strata Design in Stochastic Simulations with Multivariate Inputs, Accepted at Technometrics, 2024.

- Jain, P. and Shashaani, S., Improved Complexity of Stochastic Trust-region Methods with Dynamically Stratified Adaptive Sampling, Expected Submission July 2024

- Jain, P., Shashaani, S. Stratification with Concomitant Variables in Stochastic Trust-region Optimization. In Proceedings of the 2023 Winter Simulation Conference, edited by C.G. Corlu, S.R. Hunter, H. Lam, B.S. Onggo, J. Shortle, and B. Biller. Piscataway, NJ, 2023: IEEE.

- Jain, P., Shashaani, S., and Byon, E., Robust Simulation Optimization with Stratification, In Proceedings of the 2022 Winter Simulation Conference, edited by B. Feng, G. Pedrielli, Y. Peng, S. Shashaani, E. Song, C.G. Corlu, L.H. Lee, E.P. Chew, T.M.K. Roeder, and P. Lendermann. Piscataway, NJ, 2022: IEEE.

- Jeon, Y., Shashaani, S., Calibrating Digital Twins via Bayesian Optimization with a Root Finding Strategy, 2024 Winter Simulation Conference, Under Review.

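To illustrate why which samples are used matters as much as how many, the sketch below compares plain Monte Carlo with stratified sampling on a toy problem. It assumes a one-dimensional uniform input, fixed equal-width strata, and proportional allocation; the dynamic stratification and adaptive budget allocation studied in the papers above are not reproduced here. The function names and the test response `response` are hypothetical.

```python
import random
import statistics


def crude_estimate(f, n, rng):
    """Plain Monte Carlo estimate of E[f(U)] with U ~ Uniform(0, 1)."""
    return statistics.fmean(f(rng.random()) for _ in range(n))


def stratified_estimate(f, n, n_strata, rng):
    """Stratified estimate using equal-width strata and proportional allocation."""
    per_stratum = n // n_strata
    width = 1.0 / n_strata  # also the probability mass of each stratum
    total = 0.0
    for s in range(n_strata):
        lower = s * width
        draws = [f(lower + width * rng.random()) for _ in range(per_stratum)]
        total += width * statistics.fmean(draws)
    return total


def response(u):
    """Hypothetical simulation response; its true mean is 1/3."""
    return u * u


rng = random.Random(1)
# Repeat each estimator 200 times with the same budget of 40 samples.
crude = [crude_estimate(response, 40, rng) for _ in range(200)]
strat = [stratified_estimate(response, 40, 10, rng) for _ in range(200)]
var_crude = statistics.variance(crude)
var_strat = statistics.variance(strat)
print(var_crude, var_strat)
```

With the same sampling budget, the stratified estimator removes the between-strata part of the variance, which is the effect that a solver can exploit at every point it visits.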

- In the machine learning realm, feature selection is a problem for which we actively devise Monte Carlo-inspired algorithms. A fundamental difference in our approach is viewing machine learning as akin to calibrating a Monte Carlo simulation. This perspective allows handling the error in machine learning models via output analysis. A well-established simulation methodology called input uncertainty helps account for bias effects in the model outputs during training. Accurate yet inexpensive bias estimators with nonparametric input uncertainty methods can ultimately benefit both the simulation and machine learning communities. We investigate bias estimation with these goals in mind. Our new simulation optimization-based feature selection algorithms are stochastic binary solution methods that aim to be fast (via adaptive sampling) and robust (via bias correction). Solvers adopted range from genetic algorithms to nested partitioning. Case studies of the developed research have helped improve the interpretability and accuracy of predictions of additive manufacturing defects, avoidable hospitalizations, and farm nutrient quality (awarded funding from the NC Department of Justice).

- Vahdat, K., Shashaani, S., and Eun, H.K., Robust Output Analysis with Monte-Carlo Methodology, arXiv preprint arxiv.org/abs/2207.13612

- We devise two computational methods to capture the nonlinear effect of bias on estimated performance (via second-order approximation). The work contributes to output analysis broadly and provides significant theoretical evidence on the advantage of bias correction. The challenge we wish to tackle is to attain better accuracy for the bias-corrected estimator while also reducing its variance. We accomplish this by means of a conditional warp-speed double bootstrapping idea.

- Shashaani, S., and Vahdat, K., Monte Carlo based Machine Learning, In Proceedings of the 2022 International Conference on Operations Research, Heidelberg: Springer.

- This paper proposes a fundamentally different view of machine learning, one similar to simulation model calibration. Given a set of model parameters, the prediction error or loss produced by the model that was trained and tested by a pair of random datasets is only one replication of several needed to estimate the expected performance of that set of parameters.

- Vahdat, K., Shashaani, S., Adaptive Robust Genetic Algorithms with Ranking and Selection. In Proceedings of the 2023 Winter Simulation Conference, edited by C.G. Corlu, S.R. Hunter, H. Lam, B.S. Onggo, J. Shortle, and B. Biller. Piscataway, NJ, 2023: IEEE

- Shashaani, S., Vahdat, K., Simulation Optimization based Feature Selection. Optimization and Engineering, 24:1183–1223, 2023. doi.org/10.1007/s11081-022-09726-3

- This paper formulates feature selection among the large set of features (variables or covariates) in a given dataset as optimization under uncertainty, where the uncertainty arises because even the full dataset does not represent the true distributional behavior of the data. Besides posing the problem and describing the procedures to solve it with simulation optimization (in this case using genetic algorithms), the paper also argues that, as with other simulation optimization algorithms, the number of replications can be more intelligently selected using proxies of optimality to improve the algorithm’s performance.

- Vahdat, K., and Shashaani, S., Non-parametric Uncertainty Bias and Variance Estimation via Nested Bootstrapping and Influence Functions, In Proceedings of the 2021 Winter Simulation Conference, edited by S. Kim, B. Feng, K. Smith, S. Masoud, Z. Zheng, C. Szabo, and M. Loper, Piscataway, NJ, 2021: IEEE.

- Vahdat, K., Shashaani, S., Simulation Optimization Based Feature Selection, a Study on Data-driven Optimization with Input Uncertainty, In Proceedings of the 2020 Winter Simulation Conference, edited by K.G. Bae, B. Feng, S. Kim, S. Lazarova-Molnar, Z. Zheng, T.M.K. Roeder, and R. Thiesing, Piscataway, NJ, 2020: IEEE.

- Houser, E., Shashaani, S., Harrysson, O., and Jeon, Y., Predicting Additive Manufacturing Defects with Feature Selection for Imbalanced Data, IISE Transactions, 2023. doi.org/10.1080/24725854.2023.2207633

- In this paper we use the vast amounts of data collected — enormous log files and camera images — during an additive manufacturing process to find and prevent defects as the build progresses, rather than after it is finished (the current practice, which is quite expensive). In additive manufacturing with electron beam machines, there are many variables to monitor, and the observed defects are rare. Unlike the laser-based practices, these machines are complete black boxes with no simulation models available. The generated noisy data can only be used via machine learning and provides a perfect opportunity to test the feature selection ideas using simulation optimization. We treat the data from log files as just one possible version of many and consider the generated prediction errors (from selected features) as random outputs linked with training and test data as the random inputs. We then replicate (via bootstrapping) to estimate expected performance. Indeed, our recommended features following this methodology are more successful in capturing the true trends compared to other common techniques.

- Houser, E., Shashaani, S., Rapid Screening and Nested Partitioning for Feature Selection, 2024 Winter Simulation Conference, Under Review.

- Alizadeh, N., Vahdat, K., Shashaani, S., Ozaltin, O., Swann, J., Personalized Predictions for Unplanned UTI Hospitalization due to Urinary Tract Infection, Accepted at PLOS ONE

- Mao, L., Vahdat, K., Shashaani, S., Swann, J., Personalized Predictions for Unplanned Urinary Tract Infection Hospitalizations with Hierarchical Clustering, In 2020 INFORMS Conference on Service Science Proceedings.

- Shashaani, S., Guikema, S.D., Pino, J.V. and Quiring, S.M., Multi-Stage Prediction for Zero-Inflated Hurricane Induced Power Outages. IEEE Access, 6: 62432-62449, 2018. doi.org/10.1109/ACCESS.2018.2877078

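The view of machine learning as a simulation experiment, where each random pair of training and test sets yields one replication of the loss, can be sketched as follows. This is an illustration under stated assumptions rather than the method of the papers above: it uses a bootstrap resample for training, the left-out rows for testing, and a one-feature least-squares line as the model. All names (`fit_line`, `one_replication`, `expected_loss`) and the synthetic data are hypothetical.

```python
import random
import statistics


def fit_line(xs, ys):
    """Least-squares slope and intercept for a single feature."""
    mean_x, mean_y = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx if sxx > 0 else 0.0
    return slope, mean_y - slope * mean_x


def one_replication(rows, feature, rng):
    """One replication: bootstrap a training set, test on the left-out rows."""
    n = len(rows)
    picked = [rng.randrange(n) for _ in range(n)]
    train = [rows[i] for i in picked]
    left_out = set(range(n)) - set(picked)
    test = [rows[i] for i in left_out] or train  # fall back if nothing is left out
    slope, intercept = fit_line([r[feature] for r in train], [r[-1] for r in train])
    return statistics.fmean((r[-1] - intercept - slope * r[feature]) ** 2 for r in test)


def expected_loss(rows, feature, replications, rng):
    """Average the replicated losses to estimate expected performance."""
    return statistics.fmean(one_replication(rows, feature, rng) for _ in range(replications))


rng = random.Random(2)
rows = []
for _ in range(60):
    x0, x1 = rng.gauss(0, 1), rng.gauss(0, 1)
    rows.append((x0, x1, 2.0 * x0 + rng.gauss(0, 0.5)))  # only x0 drives the response

loss_informative = expected_loss(rows, 0, 30, rng)
loss_noise = expected_loss(rows, 1, 30, rng)
print(loss_informative, loss_noise)
```

Treating the loss as a random output makes the number of replications a design choice, so the same adaptive sampling and output analysis tools used for simulation optimization apply to comparing feature subsets.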