The group’s research interests lie at the intersection of simulation, stochastic optimization, and probabilistic data analysis. We aim to create robust and fast tools for analysis and decision-making under uncertainty. We are actively involved in improving the computing (cyber)infrastructure for optimization in stochastic settings. We are also invested in bridging the Monte Carlo perspective and machine learning.

All ongoing and prospective projects of the group can be viewed within two main thrusts:


(I) Efficiency and Reliability of Simulation(-based) Optimization Algorithms

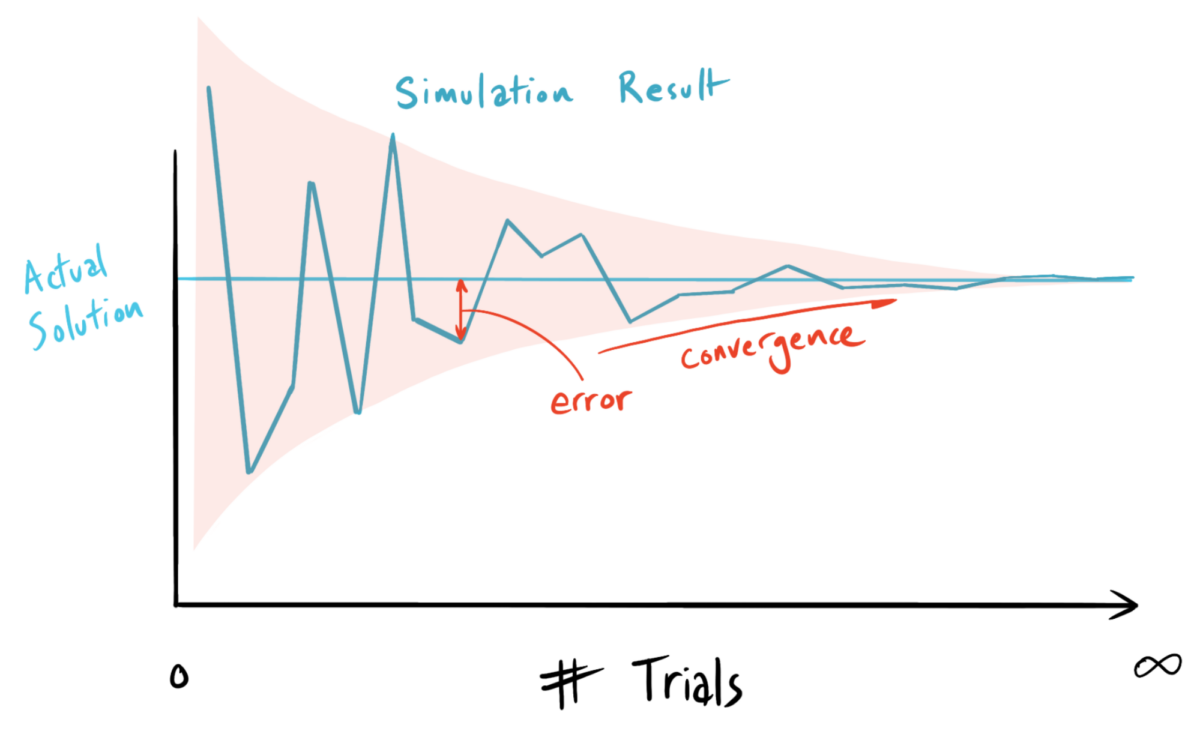

Finding optimal solutions to problems represented with stochastic simulations is hard. We seek to design robust and efficient simulation optimization algorithms.

I.A) The group’s primary focus area is local optimization of noisy, continuous, and especially derivative-free problems (given their relevance in simulation and black-box contexts), with recent attention to challenges within quantum computing applications. We have largely concentrated our efforts on the continued development of adaptive sampling strategies for trust-region algorithms, that is, on-the-fly selection of the number of oracle replications at each point to guarantee almost sure convergence and optimal efficiency. Trust regions are increasingly popular due to their flexibility for nonconvex stochastic functions. We aim to improve trust regions’ theoretical and practical properties for a broad range of problems (recently funded by an ONR grant).

Ha, Y., Shashaani, S., Tran-Dinh, Q., Regularized Adaptive Sampling Trust Region Methods for Stochastic Nonconvex Optimization, Expected Submission to Journal of Machine Learning Research, Feb 2025.

Continuous simulation optimization is challenging due to its derivative-free and often nonconvex noisy setting. Trust-region methods have shown remarkable robustness for this class of problems. Each iteration of a trust-region method involves constructing a local model via interpolation or regression within a neighborhood of the current best solution that helps verify sufficient reduction in the function estimate when determining the next iterate. When the local model approximates the function well, larger neighborhoods are advantageous for faster progress. Conversely, unsuccessful approximations can be corrected by contracting the neighborhood. Traditional trust-region methods can be slowed down by incremental contractions that lead to numerous unnecessary iterations and significant simulation cost on the way to a stationary point. We propose a unified regime for adaptive sampling trust-region optimization (ASTRO) that can enjoy faster convergence in both iteration count and sampling effort by employing quadratic regularization and dynamically adjusting the trust-region size based on gradient estimates.

Ha, Y., Shashaani, S., and Pasupathy, R., Complexity of Zeroth- and First-order Stochastic Trust-Region Algorithms, Under Second Review at SIAM Journal on Optimization, arXiv preprint arxiv.org/abs/2405.20116

We propose a comprehensive framework to analyze the sample complexity (total number of oracle runs) of stochastic trust-region algorithms to solve nonlinear nonconvex smooth problems when the oracle only provides noisy function observations, or both noisy function and noisy (but unbiased) gradient vector observations. We establish a link between the sample size, the oracle order, the use of common random numbers, and stochastic sample path structure.

Shashaani, S., Dzahini, J., Cartis, C., Randomized Subspaces for Derivative-free Adaptive Sampling Trust-region Optimization, Expected Submission to Optimization Methods and Software (OMS), Dec 2024.

We propose a new algorithm based on adaptive sampling trust-region optimization (ASTRO) that is suitable for high-dimensional decision spaces where the objective function is noisy and only available through a zeroth-order stochastic oracle. Randomized subspaces are used subject to checkable criteria, and the convergence and complexity analysis guarantees competitive performance.

Shashaani, S., Simulation Optimization: An Introductory Tutorial on Methodology, In Proceedings of the 2024 Winter Simulation Conference. (To Appear)

This paper provides perspective into the mathematical programming and approximation aspects of general algorithms in the Simulation Optimization area.

Jain, P. and Shashaani, S., Improved Complexity of Stochastic Trust-region Methods with Dynamically Stratified Adaptive Sampling, Expected Submission Jan 2025

We prove with probability 1 that using stratified adaptive sampling can lower complexity and improve sample-efficiency in stochastic trust region algorithms.

Ha, Y., Shashaani, S., Iteration Complexity and Finite-Time Efficiency of Adaptive Sampling Trust-Region Methods for Stochastic Derivative-Free Optimization, IISE Transactions, doi: 10.1080/24725854.2024.2335513

In derivative-free contexts, we propose to interpolate around an incumbent solution using coordinate basis or rotated coordinate basis, to reduce dependency on problem dimension. We also use direct search steps, when possible, to increase the probability of finding better solutions. Our new scheme converges almost surely and demonstrates accelerated performance in low-to-moderate-dimensional simulation problems.

Ha, Y., Shashaani, S., and Menickelly, M., Two-stage Sampling and Variance Modeling for Variational Quantum Algorithms, Accepted in INFORMS Journal on Computing, arXiv preprint arxiv.org/abs/2401.08912

Adaptive sampling can be costly for quantum oracles due to the large communication cost. We introduce a two-step version of the stochastic trust-region algorithm by means of a variance model that works alongside the local model that trust regions use to find the next incumbent. A variance model can also help discover the global optimum in nonconvex settings where the variance vanishes at the globally optimal solution (this is indeed the case in many quantum computing applications).

Ha, Y., Shashaani, S., Towards Greener Stochastic Derivative-Free Optimization with Trust Regions and Adaptive Sampling. In Proceedings of the 2023 Winter Simulation Conference, edited by C.G. Corlu, S.R. Hunter, H. Lam, B.S. Onggo, J. Shortle, and B. Biller. Piscataway, NJ, 2023: IEEE. doi: 10.1109/WSC60868.2023.10408143

We show that alternating between direct search and local search using interpolation reduces the rate at which the trust-region radius shrinks and therefore prevents the simulation cost from rapidly increasing at the early stages of the search.

Ha, Y., Shashaani, S., and Tran-Dinh, Q., Improved Complexity of Trust-region Optimization for Zeroth-order Stochastic Oracles with Adaptive, In Proceedings of the 2021 Winter Simulation Conference, edited by Kim, S., Feng, B., Smith, K., Masoud, S., Zheng, Z., Szabo, C., and Loper, M., Piscataway, NJ, 2021: IEEE. doi: 10.1109/WSC52266.2021.9715529

Our proposed construction of quadratic local models with diagonal Hessians, which require interpolation at O(d) points instead of O(d^2) points, where d is the dimension of the search space, significantly enhances our ability to solve higher-dimensional simulation optimization problems.
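
To illustrate why a diagonal Hessian needs only O(d) interpolation points, the following is a minimal sketch that fits a quadratic model through the 2d + 1 points formed by the center and one step in each direction along every coordinate. The function name, the coordinate-step design, and the step size `delta` are assumptions made for this illustration and are not taken from the paper; in a stochastic setting, `f` would be a sample average of oracle replications.

```python
from typing import Callable, List, Tuple


def diagonal_quadratic_model(
    f: Callable[[List[float]], float], center: List[float], delta: float
) -> Tuple[float, List[float], List[float]]:
    """Fit m(s) = f0 + g.s + 0.5 * sum_i h_i * s_i**2 through 2d + 1 points.

    The points are the center and center +/- delta * e_i for each coordinate i
    (a hypothetical design chosen for illustration).
    """
    d = len(center)
    f0 = f(center)
    grad: List[float] = []
    hess_diag: List[float] = []
    for i in range(d):
        plus = list(center)
        plus[i] += delta
        minus = list(center)
        minus[i] -= delta
        f_plus, f_minus = f(plus), f(minus)
        # Solving the two interpolation conditions along coordinate i gives:
        grad.append((f_plus - f_minus) / (2.0 * delta))
        hess_diag.append((f_plus - 2.0 * f0 + f_minus) / delta ** 2)
    return f0, grad, hess_diag
```

A full quadratic model has (d + 1)(d + 2)/2 coefficients, whereas this one has 2d + 1, which is where the reduction from O(d^2) to O(d) function evaluations comes from.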

Vasquez, D., Shashaani, S., and Pasupathy, R. ASTRO: Adaptive Sampling Trust-Region Optimization, A Class of Derivative-based Simulation Optimization Algorithms – Numerical Experiments. In Proceedings of the 2019 Winter Simulation Conference, edited by N. Mustafee, K.-H.G. Bae, S. Lazarova-Molnar, M. Rabe, C. Szabo, P. Haas, and Y.-J. Son, Piscataway, NJ, 2019: IEEE. doi: 10.1109/WSC40007.2019.9004904

When first-order information (unbiased derivatives) is available, we can adjust our adaptive sample size selection as the algorithm runs to exploit additional knowledge about the problem. Our comparisons with first-order methods show improvement.

Shashaani, S., Hashemi, F.S. and Pasupathy, R., ASTRO-DF: A class of adaptive sampling trust-region algorithms for derivative-free stochastic optimization. SIAM Journal on Optimization 28, no. 4 (2018): 3145-3176. doi: 10.1137/15M1042425

Trust-region methods are the most reliable in practice when used for nonconvex stochastic problems. But their progress is slow, in part because the stochastic noise can dominate and derail the algorithm as the incumbent nears a locally optimal solution. Our new adaptive sampling scheme, with which the algorithm converges with probability 1, decides whether to add a new sample (simulation run) using the most recent information received from the oracle. It naturally increases the sample size further into the search.
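
The idea of deciding sample sizes on the fly can be sketched as a sequential stopping rule: keep replicating at a point until the estimated standard error is small relative to the trust-region radius. The sketch below is a simplified illustration, not the rule from the paper; the threshold `kappa * delta ** 2`, the constants, and the names `adaptive_sample_size` and `make_noisy_oracle` are assumptions.

```python
import math
import random
import statistics
from typing import Callable, Tuple


def adaptive_sample_size(
    oracle: Callable[[], float],
    delta: float,
    kappa: float = 1.0,
    n_min: int = 5,
    n_max: int = 100_000,
) -> Tuple[float, int]:
    """Replicate `oracle` until std. error <= kappa * delta**2 (assumed rule)."""
    obs = [oracle() for _ in range(n_min)]
    while len(obs) < n_max:
        std_err = statistics.stdev(obs) / math.sqrt(len(obs))
        if std_err <= kappa * delta ** 2:
            break  # the estimate is precise enough for this radius
        obs.append(oracle())  # otherwise spend one more simulation run
    return statistics.fmean(obs), len(obs)


def make_noisy_oracle(seed: int) -> Callable[[], float]:
    """Hypothetical oracle: true value 1.0 observed with N(0, 1) noise."""
    rng = random.Random(seed)
    return lambda: 1.0 + rng.gauss(0.0, 1.0)


estimate, n_used = adaptive_sample_size(make_noisy_oracle(0), delta=0.3)
```

Because the radius shrinks as the incumbent approaches a stationary point, a rule of this kind spends few replications early on and more replications later, which matches the behavior described above.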

Shashaani, S., Hunter, S. R. and Pasupathy, R., 2016. ASTRO-DF: Adaptive Sampling Trust-Region Optimization Algorithms, Heuristics, and Numerical Experience. In Proceedings of the 2016 Winter Simulation Conference, edited by T.M.K. Roeder, P.I. Frazier, R. Szechtman, E. Zhou, T. Huschka, and S.E. Chick, Piscataway, NJ, 2016: IEEE. doi: 10.1109/WSC.2016.7822121

Many implementation questions for adaptive sample size selection are addressed; for example, how to select interpolation points, and how to choose algorithm constants.

I.B) Experienced with various challenges in simulation optimization, we have actively engaged in establishing strong infrastructure to help the development and improvement of existing tools and solvers in the area. One such effort has been revitalizing “SimOpt”, an open-source object-oriented library, testbed, and benchmarking platform (recently funded by an NSF grant). The library enjoys new evaluation metrics carefully devised to address solvers’ probabilistic behavior in finite time as well as their handling of stochastic constraints. Experimental design procedures tune hyperparameters and can help in other developing directions.

Sanchez, S., Shashaani, S., Eckman, D.J., Changing the Paradigm: Learning Deeply About Sequential Optimization Algorithms via Data Farming, Expected Submission to Journal of Machine Learning Research, Jan 2025.

We conduct extensive experimentation to find relationships between a solver’s hyperparameters and the optimization problem’s structure, dimension, and multimodality: a case study on the simulated annealing algorithm in R.

Eckman, D.J., Shashaani, S., Henderson, S., SimOpt: A Testbed for Simulation-Optimization Experiments, INFORMS Journal on Computing, 35(2):495-508, 2023. doi: 10.1287/ijoc.2023.1273

Main features in the SimOpt library are described, including random number management and error estimation in a two-level simulation. Data structures used in the open-source framework are also explained.

Felice, N., Eckman, D.J., Shashaani, S., Henderson, S., Comparing Solvers for Stochastic Optimization Problems with Stochastic Constraints, Expected Submission to Mathematical Programming Computation, Jan 2025.

We describe new features in the SimOpt library that enable new metrics and plots for the evaluation, comparison, and diagnostics of solvers for continuous simulation optimization problems with stochastic constraints.

Eckman, D.J., Shashaani, S., Henderson, S.G., Diagnostic Tools for Evaluating and Comparing Simulation-Optimization Algorithms, INFORMS Journal on Computing, 35(2):350-367, 2023. doi: 10.1287/ijoc.2022.1261

We propose many new performance metrics to evaluate success of a simulation optimization algorithm and contrast them with their counterparts in the deterministic optimization contexts.

Shashaani, S., Eckman, D.J., Sanchez, S., Data Farming the Parameters of Simulation-Optimization Solvers, ACM Transactions on Modeling and Computer Simulation (TOMACS), Volume 34, Issue 4, 29 pages, 2024. doi: 10.1145/3680282

Large-scale experimentation can provide valuable insights for choosing important constants in an optimization algorithm. For simulation optimization, since there are multiple layers of randomness, this process becomes cumbersome. We establish a framework via data farming and test on ASTRO-DF and multiple problems.

Felice, N., Shashaani, S., Eckman, D.J., and Sanchez, S., Data Farming for Repeated Simulation Optimization Experiments, In Proceedings of the 2024 Winter Simulation Conference. (To Appear)

In contexts where the optimization procedure needs to be repeated because problem factors such as costs change over short intervals, can large-scale experimentation be used to help adjust optimal solutions quickly? We investigate this with data farming and SimOpt.

Eckman, D.J., Henderson, S., Shashaani, S., Stochastic Constraints: How Feasible is Feasible? In Proceedings of the 2023 Winter Simulation Conference, edited by C.G. Corlu, S.R. Hunter, H. Lam, B.S. Onggo, J. Shortle, and B. Biller. Piscataway, NJ, 2023: IEEE. doi: 10.1109/WSC60868.2023.10408734

Many simulation optimization problems have constraints that are stochastic and need simulation outputs to estimate feasibility. This is an open and active area of research. We propose metrics to evaluate algorithms in this context.

(II) Monte Carlo Simulation Methods for Machine Learning and Big Data

Learning from Big Data is computationally challenging. Monte Carlo theories glean distributional insights for interpretable prediction / prevention of high-risk events.

II.A) For calibrating digital twins (a project supported by an NSF grant), we seek to enhance solvers’ ability to handle big data. This challenge, motivated by computer models that predict power generation in wind farms, has led to integrating a well-known variance reduction technique, stratified sampling, within continuous optimization. To maximally improve solver efficiency, the main concern is not only how many samples to use but also which samples. Moreover, the goal is not to economize the estimation of one function value, but rather the estimation of function values along the entire trajectory of incumbents. Therefore, both adaptive budget allocation and adaptive stratification may help with big data. We exploit dynamic partitioning via binary trees, guided by an in-depth study of score-based probabilistic trees, and a classical simulation method based on control variates for this purpose. We also prove the impact of well-implemented stratified adaptive sampling on the overall complexity (computational cost) of an optimization algorithm.

Shashaani, S., Sürer, Ö., Plumlee, M. and Guikema, S., Building Trees for Probabilistic Prediction via Scoring Rules. Technometrics, 2024. doi: 10.1080/00401706.2024.2343062

We propose training trees for probabilistic prediction via interval scores and continuous ranked probability scores and prove their consistency. With easy-to-use software on GitHub, we illustrate the success of these trees in finding heterogeneity in the data.
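
For readers unfamiliar with the two scoring rules named above, the sketch below computes their standard textbook forms: the interval score for a central (1 - alpha) prediction interval and the continuous ranked probability score (CRPS) of an empirical ensemble. It only illustrates the scores themselves; how the paper uses them inside tree training is not shown, and the function names are assumptions.

```python
from typing import Sequence


def interval_score(lower: float, upper: float, y: float, alpha: float) -> float:
    """Interval score of a central (1 - alpha) interval [lower, upper] at y."""
    width = upper - lower
    penalty_below = (2.0 / alpha) * max(lower - y, 0.0)  # y fell below interval
    penalty_above = (2.0 / alpha) * max(y - upper, 0.0)  # y fell above interval
    return width + penalty_below + penalty_above


def crps_ensemble(samples: Sequence[float], y: float) -> float:
    """CRPS of the empirical distribution of `samples` at observation y.

    Uses the identity CRPS = E|X - y| - 0.5 * E|X - X'| with X, X' drawn
    independently from the empirical distribution.
    """
    n = len(samples)
    term_obs = sum(abs(x - y) for x in samples) / n
    term_spread = sum(abs(a - b) for a in samples for b in samples) / (n * n)
    return term_obs - 0.5 * term_spread
```

Both scores are negatively oriented (smaller is better) and reward predictive distributions that are sharp yet still cover the observation.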

Jain, P., Shashaani, S., and Byon, E., Wake Effect Parameter Calibration with Large-Scale Field Operational Data using Stochastic Optimization, Applied Energy, 347: 121426, 2023. doi: 10.1016/j.apenergy.2023.121426

In wind farms, there is a deficit (wake) of wind speed in downstream turbines that reduces their generated power. Learning this deficit (which can vary based on changes in wind patterns) is important in making better predictions of wind power generation. But it is difficult because of large-scale data. With stratified sampling we adaptively use smaller subsets of data to conduct this calibration efficiently and demonstrate success using old and recent engineering models used in wind energy practice.

Jain, P., Shashaani, S., and Byon, E., Simulation Model Calibration with Dynamic Stratification and Adaptive Sampling, Accepted in Journal of Simulation, 2024. doi: 10.1080/17477778.2024.2420807

Does changing the strata during optimization help find better solutions? We investigate this question with a new procedure that uses tree-based and regression-based mechanisms to do so, and support it with experiments on synthetic and real data.

Jain, P. and Shashaani, S., Improved Complexity of Stochastic Trust-region Methods with Dynamically Stratified Adaptive Sampling, Expected Submission to Management Science, Dec 2024.

With help from inverse of empirical cumulative distribution functions and stratifying the uniform primitive that generates (draws) the random inputs for sampling, we prove that dynamic stratification with adaptive sampling significantly improves the sample complexity of ASTRO algorithms.
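
The mechanism of stratifying the uniform primitive and mapping it through an inverse cumulative distribution function can be sketched as follows. This is a generic illustration with equal-probability strata, a fixed number of draws per stratum, and an exponential input distribution, all of which are assumptions for the example; the dynamic stratification and adaptive sampling analyzed in the paper are not reproduced here.

```python
import math
import random
import statistics
from typing import Callable


def crude_estimate(inv_cdf: Callable[[float], float], n: int,
                   rng: random.Random) -> float:
    """Plain Monte Carlo estimate of E[X] with X = inv_cdf(U), U ~ Uniform(0, 1)."""
    return statistics.fmean([inv_cdf(rng.random()) for _ in range(n)])


def stratified_estimate(inv_cdf: Callable[[float], float], n_strata: int,
                        n_per_stratum: int, rng: random.Random) -> float:
    """Estimate E[X] by stratifying U into n_strata equal-probability strata."""
    largest_u = 1.0 - 2.0 ** -53  # guard against rounding up to exactly 1.0
    stratum_means = []
    for k in range(n_strata):
        draws = []
        for _ in range(n_per_stratum):
            # U restricted to the k-th stratum [k / K, (k + 1) / K)
            u = min((k + rng.random()) / n_strata, largest_u)
            draws.append(inv_cdf(u))
        stratum_means.append(statistics.fmean(draws))
    # Equal-probability strata, so the overall estimate is the plain average.
    return statistics.fmean(stratum_means)


def exp_inv_cdf(u: float) -> float:
    """Inverse CDF of the Exponential(1) distribution (illustrative input model)."""
    return -math.log(1.0 - u)


rng = random.Random(42)
print(crude_estimate(exp_inv_cdf, 100, rng))
print(stratified_estimate(exp_inv_cdf, 10, 10, rng))
```

With the same total budget of 100 draws, the stratified estimator removes the between-strata component of the variance, which is why it is typically much less variable than the crude estimator.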

Park J., Byon E., Ko Y.M., and Shashaani S., Strata Design for Variance Reduction in Stochastic Simulation, Technometrics, 2024. doi: 10.1080/00401706.2024.2416411

For single estimation, it is cumbersome to find the right structure of strata when the input space is large. Yet, it can make a significant impact in the amount of variance reduction. We extend our provably optimal procedure to do so for high-dimensional inputs via trees.

Jain, P., Shashaani, S. Dynamic Stratification and Post-Stratified Adaptive Sampling for Simulation Optimization. In Proceedings of the 2023 Winter Simulation Conference, edited by C.G. Corlu, S.R. Hunter, H. Lam, B.S. Onggo, J. Shortle, and B. Biller. Piscataway, NJ, 2023: IEEE. doi: 10.1109/WSC60868.2023.10408173

Simulations provide more data than the objective function values. With appropriate prior knowledge and correlating these concomitant data, we can improve stratification of input data for sample efficiency.

Jain, P., Shashaani, S., and Byon, E., Robust Simulation Optimization with Stratification, In Proceedings of the 2022 Winter Simulation Conference, edited by B. Feng, G. Pedrielli, Y. Peng, S. Shashaani, E. Song, C.G. Corlu, L.H. Lee, E.P. Chew, T.M.K. Roeder, and P. Lendermann. Piscataway, NJ, 2022: IEEE. doi: 10.1109/WSC57314.2022.10015515

Stratifying the input data when estimating simulation outputs used within optimization can be shown to improve the robustness of solutions and reduce an algorithm’s run-to-run variability.

Jeon, Y., Shashaani, S., Calibrating Digital Twins via Bayesian Optimization with a Root Finding Strategy, In Proceedings of the 2024 Winter Simulation Conference. (To Appear)

With a flow of incoming data, calibrating models becomes expensive. We propose a novel way to adjust the widely-used Bayesian Optimization techniques to do so efficiently.

II.B) In the machine learning realm, we continue devising feature selection algorithms inspired by Monte Carlo. A fundamental difference in our approach is viewing machine learning akin to calibration for a Monte Carlo simulation. This perspective has allowed handling the error in machine learning models via output analysis. A well-established simulation methodology called input uncertainty helps inform the bias effects from data on the model outputs. Accurate yet inexpensive bias estimators applicable to high-dimensional input spaces can ultimately benefit both simulation and machine learning communities. With the goals of being fast (via adaptive sampling) and robust (via bias correction), we have adopted two stochastic binary solution methods based on Genetic Algorithms and Nested Partitioning algorithms. The resulting selected features have improved the interpretability and accuracy in prediction of additive manufacturing defects, avoidable hospitalization, and farms’ nutrients quality (awarded funding from the NC Department of Justice).

Eun, H.K., Shashaani, S., and Vahdat, K., Robust Prediction with Efficient Bias Correction, Under Review at Operations Research, arXiv preprint arxiv.org/abs/2207.13612

We devise two computational methods to capture the nonlinear effect of bias on estimated performance (via second-order approximation). This work contributes to output analysis broadly and provides significant theoretical evidence on the advantage of bias correction. The challenge we tackle is to attain better accuracy for the bias-corrected estimator while also reducing its variance. We accomplish this by means of a conditional warp-speed double bootstrapping idea.

Eun, H.K., Shashaani, S., and Barton, R.R., Comparative Analysis of Distance Metrics for Distributionally Robust Optimization in Queuing Systems: Wasserstein vs. Kingman, In Proceedings of the 2024 Winter Simulation Conference, edited by H. Lam, E. Azar, D. Batur, W. Xie, S.R. Hunter, and M. D. Rossetti. Piscataway, NJ, 2024: IEEE. (To Appear)

We propose a moment-based Kingman distance, an approximation of mean waiting time in G/G/1 queues, to determine the ambiguity set. We demonstrate that the Kingman distance provides a straightforward and efficient method for identifying worst-case scenarios for simple queue settings. In contrast, the Wasserstein distance requires exhaustive exploration of the entire ambiguity set to pinpoint the worst-case distributions. These findings suggest that the Kingman distance could offer a practical and effective alternative for DRO applications in some cases.
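
As background, the classical Kingman approximation of the mean waiting time in queue for a G/G/1 system depends only on the utilization and the squared coefficients of variation of the interarrival and service times. The sketch below computes that classical formula; it is not the paper's Kingman distance, whose definition is not given here, and the function and parameter names are assumptions.

```python
def kingman_mean_wait(utilization: float, ca2: float, cs2: float,
                      mean_service_time: float) -> float:
    """Kingman's approximation of the mean waiting time in queue for G/G/1.

    utilization: arrival rate times mean service time (must be in [0, 1)).
    ca2, cs2: squared coefficients of variation of interarrival and
    service times, respectively.
    """
    if not 0.0 <= utilization < 1.0:
        raise ValueError("utilization must lie in [0, 1) for a stable queue")
    congestion = utilization / (1.0 - utilization)
    variability = (ca2 + cs2) / 2.0
    return congestion * variability * mean_service_time


# M/M/1 with utilization 0.8 and unit mean service time (ca2 = cs2 = 1).
print(kingman_mean_wait(0.8, 1.0, 1.0, 1.0))
```

Because the formula uses only the first two moments of the input distributions, two distributions with matching moments yield the same approximate waiting time, which is what makes a moment-based comparison of input models convenient.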

Jeon, Y., Chu, Y., Pasupathy, R., and Shashaani, S., Uncertainty Quantification using Simulation Output: Batching as an Inferential Device, Journal of Simulation, 2024. doi: 10.1080/17477778.2024.2425311

Batching is a remarkably simple and effective device for quantifying uncertainty in estimates from simulation experiments, and it is especially powerful for handling dependent output data, which frequently appear in simulation contexts. We demonstrate that, if the number of batches and the extent of their overlap are chosen appropriately, batching retains bootstrap’s attractive theoretical properties of strong consistency and higher-order accuracy.
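
The simplest form of batching, nonoverlapping batch means, can be sketched in a few lines: split the output series into contiguous batches, average each batch, and use the spread of the batch means to estimate the standard error of the overall mean. The overlapping variants studied in the paper are not shown; the AR(1) test series and all names are assumptions for illustration.

```python
import math
import random
import statistics
from typing import List, Tuple


def batch_means(data: List[float], n_batches: int) -> Tuple[float, float]:
    """Return (point estimate, standard error) from nonoverlapping batch means."""
    batch_size = len(data) // n_batches
    means = [
        statistics.fmean(data[b * batch_size:(b + 1) * batch_size])
        for b in range(n_batches)
    ]
    point = statistics.fmean(means)
    std_err = statistics.stdev(means) / math.sqrt(n_batches)
    return point, std_err


def ar1_series(n: int, phi: float, rng: random.Random) -> List[float]:
    """Generate a positively correlated AR(1) series (illustrative output data)."""
    x, out = 0.0, []
    for _ in range(n):
        x = phi * x + rng.gauss(0.0, 1.0)
        out.append(x)
    return out


series = ar1_series(10_000, 0.8, random.Random(1))
point, se_batch = batch_means(series, 20)
se_naive = statistics.stdev(series) / math.sqrt(len(series))
print(point, se_batch, se_naive)
```

For positively correlated output, the naive standard error that treats observations as independent understates the uncertainty, while the batch-means standard error accounts for the dependence within each batch.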

Shashaani, S., and Vahdat, K., Monte Carlo based Machine Learning, In Proceedings of the 2022 International Conference on Operations Research, Heidelberg: Springer. doi: 10.1007/978-3-031-24907-5_75

This paper proposes a fundamentally different view of machine learning that is similar to simulation model calibration. Given a set of model parameters, the prediction error or loss produced by the model that was trained and tested by a pair of random datasets is only one replication of several needed to estimate the expected performance of that set of parameters.

Vahdat, K., Shashaani, S., Adaptive Ranking and Selection Based Genetic Algorithms for Data-Driven Problems. In Proceedings of the 2023 Winter Simulation Conference, edited by C.G. Corlu, S.R. Hunter, H. Lam, B.S. Onggo, J. Shortle, and B. Biller. Piscataway, NJ, 2023: IEEE. doi: 10.1109/WSC60868.2023.10408610

Incorporating bias estimation can make the result of ranking and selection (e.g., to select the best features in a dataset) more robust, but it can be computationally expensive. We devise an adaptive sampling technique that adds new replications to only those input models (bootstraps) that appear to contribute the most to the overall variance.

Shashaani, S., Vahdat, K., Simulation Optimization based Feature Selection. Optimization and Engineering, 24:1183–1223, 2023. doi: 10.1007/s11081-022-09726-3

This paper formulates feature selection among the large set of features (variables or covariates) in a given dataset as an optimization under uncertainty, where the uncertainty comes from the fact that even using the entire dataset does not capture the true distributional behavior. Besides posing the problem and describing the procedures to solve it with simulation optimization (in this case using genetic algorithms), the paper also argues that, as with other simulation optimization algorithms, the number of replications can be more intelligently selected using proxies of optimality to improve the algorithm’s performance.

Vahdat, K., and Shashaani, S., Non-parametric Uncertainty Bias and Variance Estimation via Nested Bootstrapping and Influence Functions, In Proceedings of the 2021 Winter Simulation Conference, edited by S. Kim, B. Feng, K. Smith, S. Masoud, Z. Zheng, C. Szabo, and M. Loper, Piscataway, NJ, 2021: IEEE. doi: 10.1109/WSC52266.2021.9715420

We propose methods based on nonparametric statistics to estimate bias in model outputs. Since the bias estimates have higher variance, we integrate variance reduction to make them more sample-efficient.
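
As a point of reference, the textbook nonparametric bootstrap bias estimate is the average of the estimator over resampled datasets minus the estimator on the original data. The sketch below shows only this basic single-level version; the nested bootstrapping, influence functions, and variance reduction from the paper are not reproduced, and the function name and the choice of the plug-in variance as the example estimator are assumptions.

```python
import random
import statistics
from typing import Callable, List


def bootstrap_bias(data: List[float], estimator: Callable[[List[float]], float],
                   n_boot: int, rng: random.Random) -> float:
    """Basic bootstrap bias estimate: mean of resampled estimates minus original."""
    n = len(data)
    theta_hat = estimator(data)
    boot_estimates = []
    for _ in range(n_boot):
        # Resample n observations with replacement from the original data.
        resample = [data[rng.randrange(n)] for _ in range(n)]
        boot_estimates.append(estimator(resample))
    return statistics.fmean(boot_estimates) - theta_hat


rng = random.Random(0)
data = [rng.gauss(0.0, 1.0) for _ in range(20)]
# The plug-in variance (dividing by n) is biased downward, so the estimated
# bias should be negative and the corrected estimate larger.
bias = bootstrap_bias(data, statistics.pvariance, 2000, rng)
corrected = statistics.pvariance(data) - bias
print(bias, corrected)
```

The bias estimate is itself a noisy Monte Carlo quantity, which is the motivation stated above for pairing bias estimation with variance reduction.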

Vahdat, K., Shashaani, S., Simulation Optimization Based Feature Selection, a Study on Data-driven Optimization with Input Uncertainty, In Proceedings of the 2020 Winter Simulation Conference, edited by K.G. Bae, B. Feng, S. Kim, S. Lazarova-Molnar, Z. Zheng, T.M.K. Roeder, and R. Thiesing, Piscataway, NJ, 2020: IEEE. doi: 10.1109/WSC48552.2020.9383862

We view bootstrap-based bias prediction in the data as an input uncertainty tool. With this viewpoint, we tackle feature selection as a binary simulation optimization (via Genetic Algorithms) that handles input uncertainty in each step.

Houser, E., Shashaani, S., Harrysson, O., and Jeon, Y., Predicting Additive Manufacturing Defects with Feature Selection for Imbalanced Data, IISE Transactions, 2023. doi: 10.1080/24725854.2023.2207633

In this paper we use the vast amounts of data collected — enormous log files and camera images — during an additive manufacturing process to find and prevent defects as the build progresses, rather than after it is finished (the current practice, which is quite expensive). In additive manufacturing with electron beam machines, there are many variables to monitor, and the observed defects are rare. Unlike the laser-based practices, these machines are complete black boxes with no simulation models available. The generated noisy data can only be used via machine learning and provides a perfect opportunity to test the feature selection ideas using simulation optimization. We treat the data from log files as just one possible version of many and consider the generated prediction errors (from selected features) as random outputs linked with training and test data as the random inputs. We then replicate (via bootstrapping) to estimate expected performance. Indeed, our recommended features following this methodology are more successful in capturing the true trends compared to other common techniques.

Houser, E., Shashaani, S., Rapid Screening and Nested Partitioning for Feature Selection, In Proceedings of the 2024 Winter Simulation Conference. (To Appear)

We devise a fast nested partitioning algorithm that uses a screened feature space to find optimal features. In doing so, we reduce the computational time by orders of magnitude.

Alizadeh, N., Vahdat, K., Shashaani, S., Ozaltin, O., Swann, J., Risk Score Models for Urinary Tract Infection Hospitalization, PLOS ONE 19.6: e0290215., 2024. doi: 10.1371/journal.pone.0290215

How can we build interpretable prediction models to inform healthcare experts of risks of hospitalization using a large-scale history of health data from a population? We predict risk scores by finding the most influential attributes in individuals from different clusters of the population. The results are simpler models that have better accuracy and generalization and, most importantly, are transparent and trusted by subject matter experts.

Mao, L., Vahdat, K., Shashaani, S., Swann, J., Personalized Predictions for Unplanned Urinary Tract Infection Hospitalizations with Hierarchical Clustering, In 2020 INFORMS Conference on Service Science Proceedings. doi: 10.1007/978-3-030-75166-1_34

When learning patterns from large-scale data of an entire population, we propose a more personalized approach by finding criteria that segment the population into different behavioral patterns and therefore different care requirements. We then train separate models for each cluster and show improvements in prediction accuracy for UTI cases.

Shashaani, S., Guikema, S.D., Pino, J.V. and Quiring, S.M., Multi-Stage Prediction for Zero-Inflated Hurricane Induced Power Outages. IEEE Access, 6: 62432-62449, 2018. doi: 10.1109/ACCESS.2018.2877078

Power outages caused by hurricanes can be predicted before landfall to help restoration efforts. However, they pose a difficult classification challenge, as training data at high geographical resolutions and time intervals are often extremely imbalanced, with less than 1% outage events. Our proposed three-stage prediction tackles this challenge and proves successful in recent hurricane events in comparison to existing methods.